![[D] Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple : r/MachineLearning](https://external-preview.redd.it/7lLQAM6QKl67UsD5ElJ1PF7GwjblZnPcXgdqHv64b_A.jpg?width=640&crop=smart&auto=webp&s=03b8c9244d7278f8daf9813ac72d3a76008021bd)

[D] Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple : r/MachineLearning

NVIDIA's Answer: Bringing GPUs to More Than CNNs - Intel's Xeon Cascade Lake vs. NVIDIA Turing: An Analysis in AI

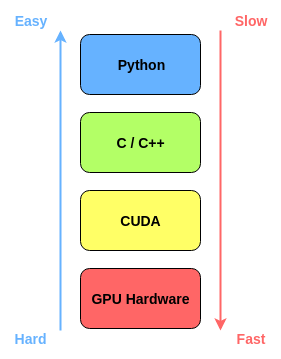

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

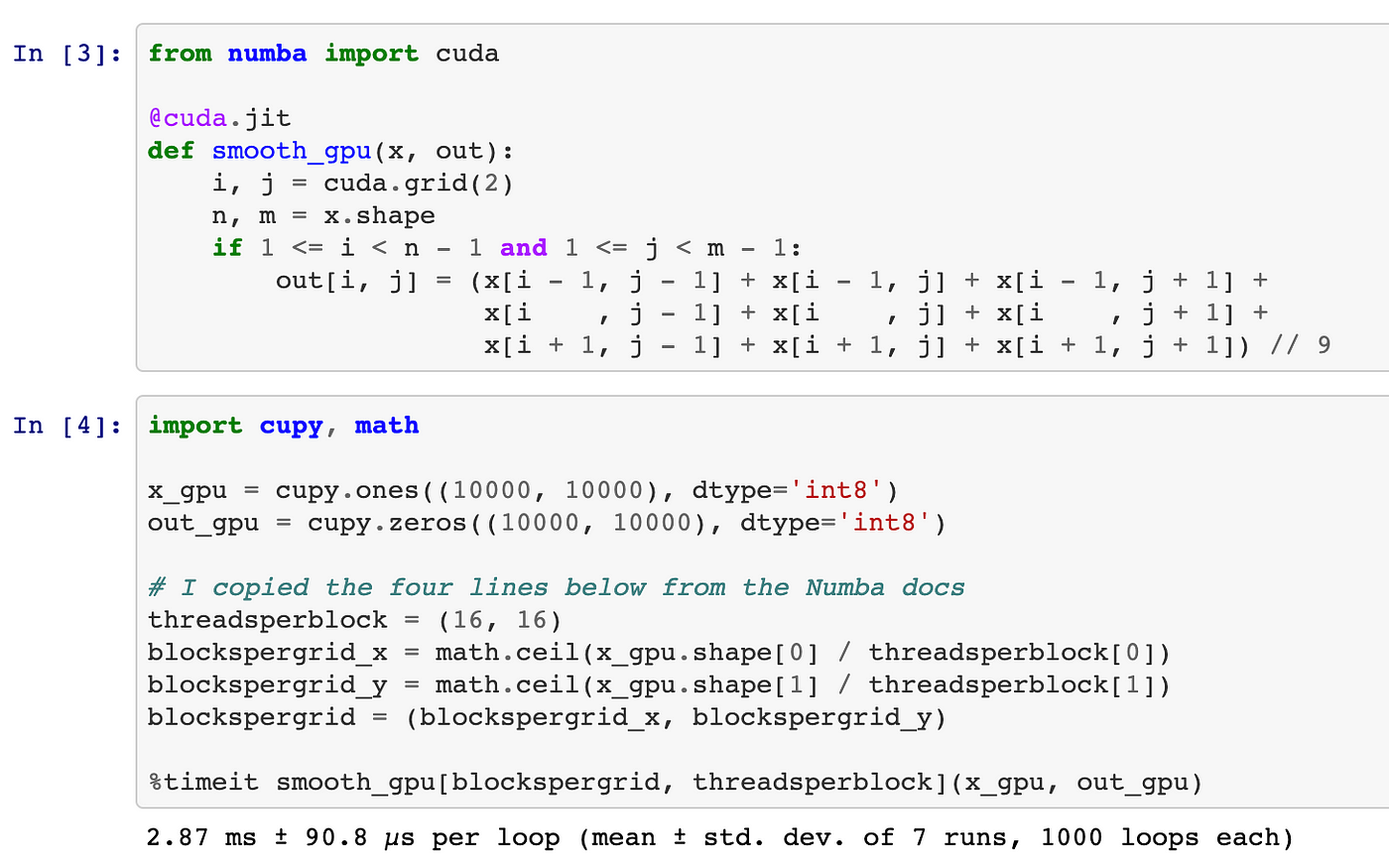

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

GitHub - meghshukla/CUDA-Python-GPU-Acceleration-MaximumLikelihood-RelaxationLabelling: GUI implementation with CUDA kernels and Numba to facilitate parallel execution of Maximum Likelihood and Relaxation Labelling algorithms in Python 3